New Era of LLM Inference on Rebellions NPU

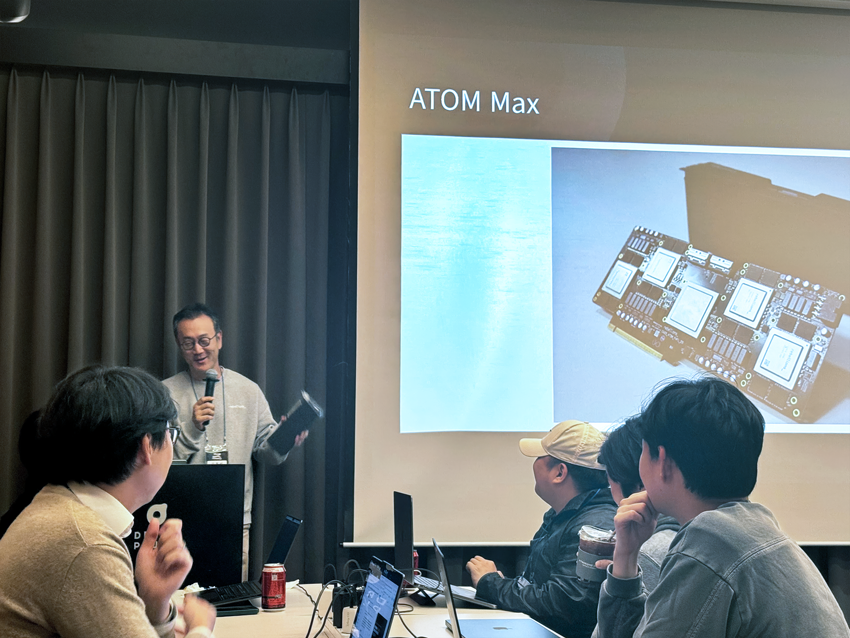

Following the vLLM Korea Meetup in August, Rebellions and SqueezeBits co-hosted the vLLM Hands-on Workshop on October 29. It was the first developer-focused, hands-on workshop led by a Korean NPU company, and a rare opportunity for participants to experience NPU-based inference with vLLM directly.

A Chance to Demystify the NPU

According to post-event surveys, every participant rated the workshop “satisfactory” or higher. The most impactful part, by far, was the experience of running vLLM directly on the Rebellions NPU. Participants noted that those already familiar with PyTorch could follow along easily without additional setup.

It was also a rare opportunity to get clear answers to long-standing technical questions, such as:

- Does adopting an NPU require major changes to existing code or MLOps pipelines?

- How seamlessly does the NPU integrate with open AI frameworks like Hugging Face, PyTorch, and vLLM?

- How can complex MoE (Mixture-of-Experts) models be efficiently distributed across NPUs?

Through this hands-on experience, developers saw firsthand how Rebellions NPUs are evolving into developer-friendly yet high-efficiency AI inference infrastructure.

Familiar Developer Experience (DX)

To ensure smooth hands-on sessions, Rebellions built the lab environment on its ATOM™ NPU servers, with each participant connecting to a dedicated NPU node in a Kubernetes-based resource pool.

The first session began with a simple PyTorch Eager Mode exercise. Using the same code structure as on GPU, participants ran tensor operations on the NPU with just a single-line command—no extra libraries required. They then loaded and ran inference on the Phi-3 0.6B model using the standard Hugging Face Transformers library.
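The "same code structure as on GPU" idea can be illustrated with a minimal, device-agnostic PyTorch sketch. The `DEMO_DEVICE` environment variable is a hypothetical name used only for this example, and the device string that the NPU's PyTorch integration registers is not given in this article, so the sketch defaults to `cpu`.

```python
import os

import torch

# Hypothetical: DEMO_DEVICE is an illustrative variable, not a Rebellions setting.
# Set it to whatever device string the accelerator's PyTorch integration registers.
device = torch.device(os.environ.get("DEMO_DEVICE", "cpu"))


def scaled_matmul(a, b):
    """Plain eager-mode tensor math; nothing in here is device-specific."""
    return (a @ b) / a.shape[-1] ** 0.5


# The device is chosen once, when the tensors are created.
a = torch.ones(2, 4, device=device)
b = torch.ones(4, 3, device=device)
out = scaled_matmul(a, b)
print(out.shape, out.device)
```

Only the line that selects the device changes between targets; the model code itself stays the same.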

Next came the Graph Mode session, designed for production-level performance optimization. Using the RBLN Profiler and the Perfetto visualization tool, participants examined NPU execution traces and pipeline structures at the tile level. By compiling the Prefill and Decode graphs separately and applying optimizations such as FlashAttention kernels and KV caching, they observed significant speedups compared to Eager Mode.

The vLLM-Rebellions NPU Backend Plugin

The centerpiece of the workshop was the vLLM-Rebellions backend plugin, a torch.compile-based integration that brings vLLM to Rebellions hardware. The plugin implements Paged Attention and Continuous Batching directly on the NPU, optimizing for memory efficiency and throughput in real-world deployment scenarios.
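For readers new to the idea, paged attention stores each sequence's KV cache in fixed-size blocks tracked by a block table, so memory is allocated on demand and returned as soon as a request finishes. The toy allocator below is a pure-Python sketch of that bookkeeping only; the class and method names are hypothetical and it is not the plugin's implementation.

```python
class PagedKVCache:
    """Toy block-table allocator: each sequence maps to a list of physical blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids not in use
        self.block_tables = {}                      # seq_id -> physical block ids
        self.seq_lens = {}                          # seq_id -> tokens stored so far

    def append_token(self, seq_id):
        """Reserve a slot for one more token, allocating a block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length == len(table) * self.block_size:  # every allocated block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks left")
            table.append(self.free_blocks.pop(0))
        self.seq_lens[seq_id] = length + 1
        # Physical location of the new token: (block id, offset inside the block)
        return table[length // self.block_size], length % self.block_size

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=2)
for _ in range(3):
    cache.append_token("a")        # "a" needs two blocks for three tokens
slot_b = cache.append_token("b")   # "b" gets its own block
cache.free("a")                    # "a" finishes; its blocks are available again
slot_c = cache.append_token("c")   # a new request joins without waiting for "b"
```

Freeing blocks per sequence is what lets continuous batching admit new requests while others are still decoding, rather than waiting for the whole batch to finish.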

It also provides an OpenAI-compatible API endpoint, allowing developers to reuse their existing API workflows while seamlessly leveraging Rebellions’ hardware acceleration. The key message: new hardware adoption doesn’t have to mean rewriting your codebase.
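As a rough illustration of what reusing an existing API workflow looks like, the sketch below builds a chat completion request in the OpenAI wire format using only the Python standard library. The base URL, port, and model name are placeholders chosen for this example; the actual values depend on how the server is launched.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=64):
    """Build a POST request for an OpenAI-compatible /v1/chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint and model name, for illustration only.
req = build_chat_request("http://localhost:8000", "my-served-model", "Hello")

# To send it against a running server:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

Because the request shape is the same as for any other OpenAI-compatible server, client code typically only needs a different base URL.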

In the final session, a Mixture-of-Experts (MoE) distributed inference demo showcased the Qwen1.5-MoE model running on Rebellions NPUs, combining tensor and expert parallelism for efficient scaling. Rebellions is currently preparing official MoE support within vLLM-RBLN, continuing to enhance performance and scalability for large-scale distributed inference on NPUs.
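The expert-parallel half of that combination can be sketched in a few lines: a router picks the top-k experts for each token, and each (token, expert) pair is sent to the device that hosts that expert. The function names, the even expert split, and the router scores below are all made up for illustration; tensor parallelism, which additionally splits each weight matrix across devices, is not shown.

```python
def top_k_experts(router_scores, k):
    """Indices of the k highest-scoring experts for one token."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda e: router_scores[e], reverse=True)
    return ranked[:k]


def dispatch(token_scores, num_devices, k=2):
    """Group (token, expert) pairs by the device that owns each expert."""
    num_experts = len(token_scores[0])
    experts_per_device = num_experts // num_devices  # assumes an even split
    per_device = {d: [] for d in range(num_devices)}
    for token_id, scores in enumerate(token_scores):
        for expert in top_k_experts(scores, k):
            per_device[expert // experts_per_device].append((token_id, expert))
    return per_device


# Made-up router scores: 2 tokens, 4 experts, 2 devices (experts 0-1 and 2-3).
scores = [
    [0.10, 0.70, 0.05, 0.15],  # token 0 -> experts 1 and 3
    [0.60, 0.10, 0.25, 0.05],  # token 1 -> experts 0 and 2
]
plan = dispatch(scores, num_devices=2)
print(plan)
```

Each device only runs the experts it hosts, so adding devices increases the number of experts that can be served without replicating every expert everywhere.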

Building an Open and Collaborative AI Ecosystem

The workshop went beyond a demo: participants worked in a production-grade, Kubernetes-based environment and experienced firsthand how Hugging Face, PyTorch, and vLLM integrate naturally on Rebellions NPUs.

Moving forward, Rebellions and SqueezeBits plan to run regular vLLM Hands-on Workshops over the next two years, helping Korean developers gain real, service-level experience with NPU-based vLLM deployment.

Rebellions remains committed to expanding the open-source AI ecosystem, strengthening the technical foundation for NPUs as a stable, scalable element of AI infrastructure, and ensuring that more developers can access, understand, and benefit from this next-generation compute platform.
